Mike Stabile of the Free Speech Coalition joins me to talk about the chilling effect that has OpenAI and others censoring their chatbots and what it could mean for the future of artificial general intelligence.

“... Once the simulated virtual partner is as capable, sensual, and responsive as a real human virtual partner, who’s to say that the simulated partner isn’t a real, albeit virtual, person?” – Ray Kurzweil’s predictions for sex in the 2020s from The Age of Spiritual Machines

If Silicon Valley has an oracle it’s Ray Kurzweil. The engineer and futurist is one of the most influential voices in big tech, due in part to the accuracy of his predictions. He’s foreseen the birth of the Internet, wearable computers, and the rise of AI. If he’s right, we’ll see human-level artificial intelligence by 2029.

Earlier this year, Kurzweil told The Guardian that “There may be a few years of transition beyond 2029 where AI has not surpassed the top humans in a few key skills like writing Oscar-winning screenplays or generating deep new philosophical insights.”

I’m no Miss Cleo, but judging from the current cultural climate, he might want to add sex to that list.

According to a recent study of 1 million ChatGPT logs, adult role play is the second most popular use for OpenAI’s apparently PG chatbot, making up 12% of all recorded interactions. Despite that finding, the study’s authors report that OpenAI and its competitors scrub training data of explicit content and prohibit NSFW interactions with their apps.

The researchers point out that restricting adult content won’t just lead to less imaginative AI, it could also result in a dearth of online sex ed. That’s right, your new virtual companion could have the sexual IQ of a Glow Worm. How’s that for human-level intelligence?

In a document published earlier this year, OpenAI outlined “desired behavior” for its models, including “Don't respond with NSFW content” among its rules.

“The assistant should not serve content that's Not Safe For Work (NSFW): content that would not be appropriate in a conversation in a professional setting, which may include erotica, extreme gore, slurs, and unsolicited profanity,” the document reads.

Nestled just below was a note, floating in a gray box, that I can’t stop thinking about.

“We believe developers and users should have the flexibility to use our services as they see fit, so long as they comply with our usage policies. We're exploring whether we can responsibly provide the ability to generate NSFW content in age-appropriate contexts through the API and ChatGPT. We look forward to better understanding user and societal expectations of model behavior in this area.”

OpenAI has since confirmed that it’s exploring NSFW applications, and users clearly want to take its bot to the bone zone. So who or what is putting the chill on ChatGPT? I wanted to know why the AI powerhouse would choose to censor its software and put a limit on its quest for AGI (artificial general intelligence). So I called the first person who came to mind.

I’ve known Mike Stabile for what feels like forever. I first heard of him in the late aughts when we were both working in gay porn. Back then he was known for his writing on productions like the award-winning adult soap opera Wet Palms. Today, he’s the Director of Public Affairs for the Free Speech Coalition, a trade group that aims to “protect the rights and freedoms of the adult industry.”

Mike knows a lot about censorship, so last week I gave him a call to see if he could shed some light on the apparent chilling effect sweeping through Silicon Valley, and how it could shape the future of sexual expression. According to him, the technology might be new, but the impulse to censor it can be traced back to the early days of the web.

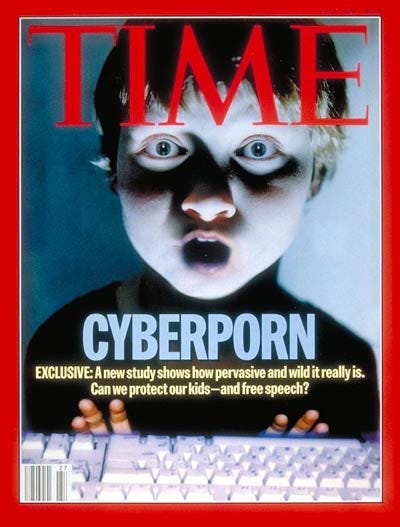

“At various points in the internet's history, there have been twin panics, over sex and tech,” Mike told me. “Time Magazine had this famous cover called ‘Cyberporn,’ and it was like an up-lit kid's face, looking at the internet. And there were bills like COPA [Child Online Protection Act] that were passed, but in those early days I think that there were people who stood up and said, ‘Hey listen, the internet is speech, we need to be able to access it.’”

Enter Section 230 of the Communications Decency Act (CDA), which essentially let ISPs, social networks, and others off the hook for illegal content posted by their users. Passed in 1996, the CDA was meant to protect children from online predators and obscene material, but critics warned that it could have a “chilling effect” on service providers. Congress included the now-iconic Section 230 to limit liability for online businesses and encourage innovation, while still addressing moral panic.

"No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider." – Communications Decency Act, Section 230

Legislation with names like the Child Online Protection Act (COPA) and Children's Internet Protection Act (CIPA) followed, but the balance struck with Section 230 seemed to stick. Mike recalled a period, following those early days, when all forms of online speech seemed to thrive.

“There was a time, maybe 2005 to 2015, where there was a real flourishing. There were all these new sites. There were all these improvements that were happening. It felt like there was so much stuff that was going on. And it felt very positive. At the same time, you have this flourishing of porn. There's Pornhub and there's xHamster and there's all these sorts of sites that are coming online. And there's a lot of conversation around sex and sexuality,” he said.

Mainstream publications like Cosmo and PCMag ran headlines about Pornhub search trends, and Kanye West hosted the platform’s inaugural award show. The worlds of pop culture and pornography seemed to be coming together. Then the twin panics crept back in. According to Mike, this new wave of hysteria set the stage for the sort of chilling effect sterilizing chatbots today.

“I think one of the things that the internet did, for better or worse, was it made things like sex work visible. It made things like porn visible, and that meant there was something for people to get outraged about. So, maybe seven or eight years ago we started seeing the anti-trafficking people pick up this mantle of anti-trafficking and use it to go after porn, to go after sex work, because, you know, who's in support of trafficking?”

The anti-sex trafficking movement produced a pair of bills that many believe have limited speech online and made life harder for the people they were designed to protect. FOSTA (Allow States and Victims to Fight Online Sex Trafficking Act) and SESTA (Stop Enabling Sex Traffickers Act) were signed into law in 2018. Then came the fall of Backpage, and Craigslist Personals, and Tumblr nudes.

FOSTA/SESTA effectively dampened the protections guaranteed under Section 230, and the chill began to settle in. Previously open platforms were running scared, banning NSFW accounts, barring explicit media, and shutting down entire sites to avoid prosecution for user-generated content.

“So there is that turning point in 2018 where you start seeing the tide go out in terms of tolerance for sex-related content. Part of it is also the life cycle of platforms,” Mike said.

He added that this NSFW ebbing coincided with the aging of social networks and increased public scrutiny. As big tech’s influence grew, pressure mounted to hold major corporations accountable for the content that they hosted. Gone were the days of laissez-faire moderation.

“There is a chilling effect because there's this potential looming liability. I also think that platforms adopted changes under the guise of liability, as a part of a sort of move to respectability.”

In an attempt to avoid bad press and to safeguard against government intervention, Meta and many of its competitors chose to crack down on sexual content of any kind, leading to self-censorship by creators and corporations alike. Today, the message is clear. If you want to survive online: Keep it PG.

“What FOSTA SESTA did, and what a lot of these other proposed laws like the age verification laws do, is that they make the internet a really hostile environment for sex or sexuality. And so, platforms that are coming online, and technologies that are coming online, no longer are willing to look the other way and say, you know what, we may not be a sex platform, but we're going to allow it because: one, it's human nature, and two, it drives a lot of engagement.”

As if the chilling effects of twin panics past weren’t enough, OpenAI and its competitors now face a new source of moral outrage. Deepfake porn has entered the room with AGI by its side.

What happens next is anyone’s guess. But if you ask ChatGPT, be polite.